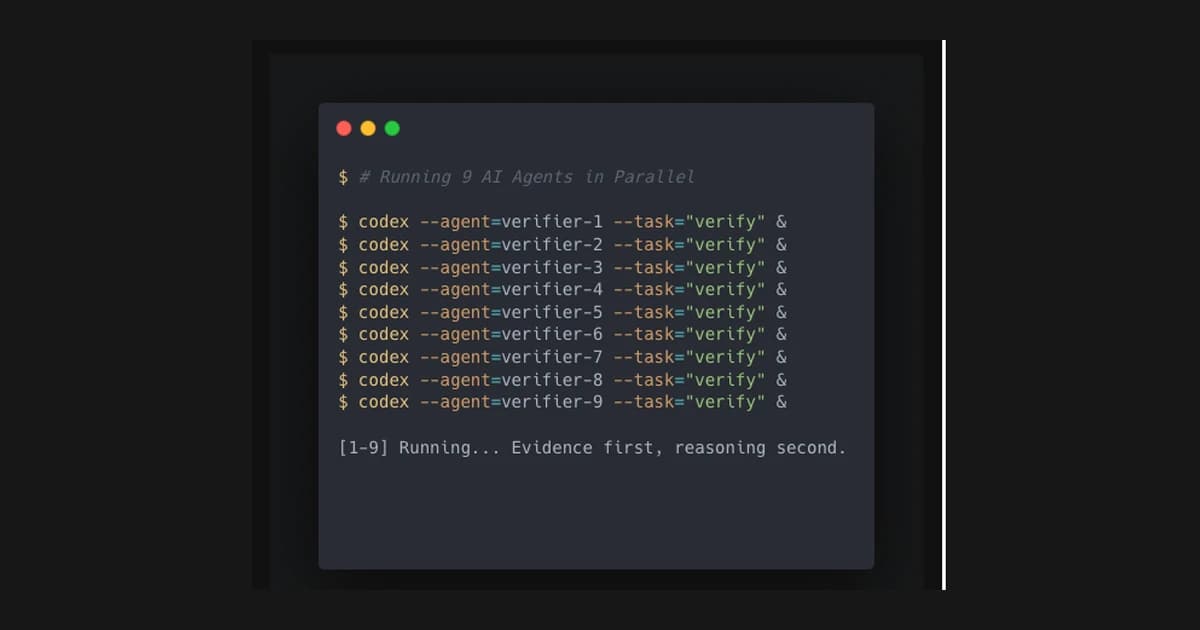

Running 9 AI Agents Simultaneously

A deeper look at running nine agents in parallel, the verification rubric, and the ops lessons that mattered.

Running nine AI agents in parallel should not work. Today it did.

I spawned nine Codex CLI agents to verify claims made by earlier agents. The goal was not speed. It was trust. I wanted independent checks, not one model rubber-stamping itself.

This is what the setup looked like, what broke, and why I think verification is the real work in multi-agent systems.

Why I did it

I use agents every day. That means I also live with the risk of confident errors. The more you scale output, the more those errors matter.

I needed a way to validate what I was getting back. Not just one agent checking itself, but multiple independent passes using the same rubric. That is the closest thing we have to verification right now.

The setup

The workflow was simple and strict:

- Split the claim list into smaller batches

- Give every agent the same evidence rubric

- Require concrete proof: commands, logs, citations

- Stop once a claim was verified or blocked

The key was consistency. Every agent had to follow the same rules so the outputs could be compared.
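
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the actual harness: `run_agent` is a hypothetical stand-in for whatever launches a real agent, the rubric text is paraphrased from this post, and the thread pool is an assumption about how the parallel dispatch could be wired.

```python
from concurrent.futures import ThreadPoolExecutor

# One shared rubric string, so every agent works under identical rules.
RUBRIC = (
    "Verify each claim with a command, log line, or citation. "
    "Stop once a claim is verified or blocked."
)


def split_into_batches(claims, num_batches):
    """Deal claims round-robin into at most num_batches non-empty lists."""
    batches = [claims[i::num_batches] for i in range(num_batches)]
    return [batch for batch in batches if batch]


def run_agent(agent_id, batch, rubric):
    """Hypothetical placeholder: a real version would start an agent process."""
    return {"agent": agent_id, "rubric": rubric, "claims": batch}


def dispatch(claims, num_agents=9):
    """Send one batch to each agent in parallel and collect the reports."""
    batches = split_into_batches(claims, num_agents)
    with ThreadPoolExecutor(max_workers=num_agents) as pool:
        futures = [
            pool.submit(run_agent, agent_id, batch, RUBRIC)
            for agent_id, batch in enumerate(batches)
        ]
        return [future.result() for future in futures]
```

Passing the same `RUBRIC` object to every call is the point of the sketch: the outputs are only comparable if the rules were identical.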

The evidence rubric

I wrote a simple rubric before I started. If a claim could not be backed by a command, log, or citation, it did not pass.

That meant no summaries without sources. No hand-waving. No "it looks right." Every claim needed a receipt.

This is the difference between research and verification. Research is exploratory. Verification is binary.

What counts as proof

Proof is a command output, a log line, or a concrete source. It is not a paragraph. It is not a vibe. It is a thing you can point at.

When you force that standard, the quality of the output jumps. It also keeps you honest. You either have proof or you do not.
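
One way to make that standard mechanical is to encode it as a data structure. The sketch below is an assumption about how the rubric could be represented, not the format I actually used: the class names and the `kind` labels are hypothetical, and the only rule it enforces is the one stated above, that a claim passes only if it carries at least one command output, log line, or citation.

```python
from dataclasses import dataclass, field

# The three kinds of proof named in the rubric.
ACCEPTED_KINDS = {"command", "log", "citation"}


@dataclass
class Evidence:
    kind: str     # "command", "log", or "citation"
    content: str  # the command output, log line, or source reference


@dataclass
class ClaimResult:
    claim: str
    evidence: list = field(default_factory=list)

    def passes(self) -> bool:
        """Binary check: at least one non-empty piece of accepted evidence."""
        return any(
            item.kind in ACCEPTED_KINDS and bool(item.content.strip())
            for item in self.evidence
        )
```

A result whose only evidence is of kind `"summary"`, or whose evidence list is empty, fails. There is no partial credit, which matches the binary framing.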

The ops reality

The hardest part was operations. Because sessions could time out, approvals had to stay active for the whole run, and each workload had to be small enough to finish cleanly. If you lose the approval window, the agent dies and the work is lost.

That is not glamorous, but it is the reality of running parallel agents today. The system is only as reliable as the runtime controls.

The results

Sixteen of sixteen claims verified. Three discrepancies flagged for follow-up. Those discrepancies were the real win because they showed where my earlier assumptions were wrong.

This is why verification matters. It surfaces blind spots, not just confirmations.

The discrepancies were the real value

The three flagged discrepancies were specific. One was a LinkedIn count that did not match the data. Another was a rebase count that turned out to be off. The last was a claim about a repo state that did not match the filesystem.

None of these were catastrophic, but they mattered. They proved the system was doing real checking instead of agreeing with itself. That is the difference between a useful agent network and a polite echo chamber.

The key lesson

In my runs, the Codex agents stopped timing out once I ran them with pty true. That one flag turned the workflow from brittle to reliable. It made long verification chains possible.

That is not a model issue. It is an ops issue. And it changes everything about how you can run multi-agent systems at scale.

Why pty true mattered

The pty flag sounds like a small technical detail. It is not. Without it, the sessions timed out and the agents dropped mid-task. With it, the workflow stabilized.
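
For readers unfamiliar with the term, a pty is a pseudo-terminal. The sketch below is a general illustration using Python's standard `pty` module on a Unix-like system. It is not the Codex CLI's mechanism, and it does not reproduce whichever tool setting pty true refers to in my setup. It only shows the underlying difference: the same child process sees a pipe in one case and a terminal in the other, and interactive programs often behave differently depending on which one they get.

```python
import os
import pty
import subprocess
import sys

# Child command: report whether its stdout is attached to a terminal.
CHECK = [sys.executable, "-c", "import sys; print(sys.stdout.isatty())"]


def run_with_pipe() -> str:
    """Run the child with stdout captured through an ordinary pipe."""
    result = subprocess.run(CHECK, capture_output=True, text=True, check=True)
    return result.stdout.strip()


def run_with_pty() -> str:
    """Run the child with its standard streams attached to a pseudo-terminal."""
    master_fd, slave_fd = pty.openpty()
    try:
        proc = subprocess.Popen(
            CHECK, stdin=slave_fd, stdout=slave_fd, stderr=slave_fd
        )
        os.close(slave_fd)  # the parent only needs the master side
        slave_fd = -1
        chunks = []
        while True:
            try:
                data = os.read(master_fd, 1024)
            except OSError:
                break  # Linux signals end of output with an I/O error
            if not data:
                break  # other platforms return an empty read instead
            chunks.append(data)
        proc.wait()
    finally:
        os.close(master_fd)
        if slave_fd != -1:
            os.close(slave_fd)
    return b"".join(chunks).decode().strip()
```

Under these assumptions, `run_with_pipe()` reports that stdout is not a terminal and `run_with_pty()` reports that it is.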

That is a reminder that multi-agent systems are not just about prompts. They are about runtime. If the runtime is flaky, the system is flaky, no matter how good the models are.

Ops lessons from running nine agents

Approvals are the hidden bottleneck. An expired approval kills the agent mid-task, so I kept workloads small and staged the runs to avoid that failure.

I also kept an eye on latency. When the system slows down, it is easy to lose track of which agent is doing what. A simple status log saved me more than once.
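
A status log does not need to be elaborate. The following is a minimal sketch of the idea rather than the log I actually kept: the `StatusLog` class, its field names, and the state labels are all hypothetical. It appends a timestamped entry per event and can answer the one question that matters when things slow down, which is what each agent is doing right now.

```python
from datetime import datetime, timezone


class StatusLog:
    """Append-only record of agent state changes."""

    def __init__(self):
        self.entries = []

    def record(self, agent_id, state, note=""):
        """Add one timestamped entry for an agent."""
        self.entries.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "agent": agent_id,
                "state": state,
                "note": note,
            }
        )

    def latest(self):
        """Return the most recent state recorded for each agent."""
        current = {}
        for entry in self.entries:
            current[entry["agent"]] = entry["state"]
        return current
```

Keeping the full history rather than overwriting state means the log can also be replayed later to see when an agent stalled.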

What surprised me

The surprising part was not that agents could verify claims. The surprising part was how quickly the process surfaced my own assumptions.

I expected the system to confirm what I already believed. Instead it challenged me. That is the real value. A good verification system should make you uncomfortable because it shows you where you were wrong.

Reconciling the outputs

Parallelism is easy. Reconciliation is not.

I required each agent to return a short evidence summary. When two agents disagreed, I manually checked the source. If a claim was blocked, I logged it and moved on.

This is the core of the workflow. The agents do the first pass. The human does the arbitration.
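
The arbitration rule can be written down as a small function. This is a sketch under assumptions, not the process I ran by hand: the verdict labels (`verified`, `failed`, `blocked`) and the `needs_human_review` outcome are hypothetical names. Agreement passes through, blocked claims are logged as blocked, and any disagreement between agents is routed to a human.

```python
from collections import defaultdict


def reconcile(reports):
    """Combine (claim, verdict) pairs from several agents into one outcome per claim.

    Unanimous verdicts are kept as-is. Any disagreement is escalated,
    because deciding between conflicting evidence is the human's job.
    """
    verdicts = defaultdict(set)
    for claim, verdict in reports:
        verdicts[claim].add(verdict)

    outcome = {}
    for claim, seen in verdicts.items():
        if len(seen) == 1:
            outcome[claim] = next(iter(seen))
        else:
            outcome[claim] = "needs_human_review"
    return outcome
```

The function deliberately does not vote or pick a majority. Two agents agreeing against one is still a disagreement worth checking at the source.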

The human role

The human is the arbiter. The agent can gather and summarize. It cannot decide what matters. That is still on you.

In this workflow, my role was to reconcile conflicts and judge whether the evidence was sufficient. That is the real cost of verification, and it is still worth it.

Verification over vibes

Multi-agent systems fail when they prioritize speed over truth. If you build a system that cannot be audited, you are setting yourself up for an expensive surprise.

Logs matter. Evidence matters. The final answer should be traceable back to a source. Anything less is a risk.

Designing for verification

If you are building multi-agent systems, design for verification first.

- Define a shared rubric

- Require receipts, not summaries

- Log everything

- Make reconciliation a first-class step

Parallelism only helps if you can reconcile the outputs. Otherwise you just get faster confusion.

The verification mindset

Verification is a habit. It is slower upfront, but it saves you later. If you build the habit early, you stop trusting every output by default. You start treating claims as hypotheses until they are proven.

That shift changes how you work. It makes your systems resilient because they are built around evidence, not optimism.

Where people go wrong

Most people scale output before they scale verification. They chase speed and forget the cost of being wrong. That works until the first public mistake.

If your system can publish or decide, it needs a verification step. There is no shortcut around that.

Where this applies

Any workflow that touches your reputation needs verification. Content claims, client deliverables, code reviews, even internal reporting. If you are making decisions based on agent output, you need a way to trust that output.

It does not have to be nine agents. It just has to be a system that can be audited.

The cost of being wrong

When you ship fast, mistakes compound. A bad claim in a post turns into a bad decision. A bad decision turns into a wasted week. This is why verification is not optional.

The system I built is slower than blind automation, but it is faster than cleaning up a public mistake. I would rather spend a few minutes verifying than spend days repairing trust.

That tradeoff is the real reason I care about this.

Scaling beyond nine

More agents is not always better. The bottleneck shifts from generation to reconciliation. That means the real scaling work is not in adding agents. It is in improving the audit trail and the decision process.

If I scale this further, I will automate parts of the reconciliation and tighten the rubric even more. Otherwise you just get more noise.

What I would change next time

I would design the verification rubric before the claims list. I would pre-assign which agents check which sources. I would reduce the approval-window risk by keeping tasks even smaller.

I would also automate the reconciliation log so I do not have to do it by hand. That is the next bottleneck.

The real point

This was not about proving the agents are smart. It was about proving the system can be trusted.

The difference between a toy and a production system is verification. If you are not designing for that, you are not really building a system. You are just running prompts.

Verification is boring until it saves you. The moment you catch a wrong claim before it ships, the value becomes obvious. That is the entire reason I ran nine agents. I want this to be normal, not heroic.

If you are building with agents, start with verification on day one. It is easier to build it early than to retrofit it later, and it is the cheapest insurance you can buy. Do it before you scale, because trust scales slower than output.

The question I keep asking

Have you tried parallel agents in production workflows? What broke first?

Amir Brooks

Software Engineer & Designer